Introduction

I got an opportunity to work on a project recently in which one of the requirements was to analyze the sentiment on a given corpus of text data. This called for researching the subject matter. In this two-part blog series, I am going to share some observations.

- Sentiment analysis is a Natural Language Processing (NLP) task. It refers to finding patterns in data and inferring the emotion of a given piece of information, which can be classified into one of these categories:

- Negative

- Neutral

- Positive

- We are truly living in the information age where both humans and machines generate data at an unprecedented rate; therefore, it’s nearly impossible to gain insights into such data to make intelligent decisions manually. One such insight is assessing/calculating the sentiment of a big (rather huge) dataset.

- Software to the rescue yet again. One way of assessing the emotion expressed in such a huge dataset is to employ sentiment analysis techniques (more on those later).

- Market sentiment: A huge amount of data is generated in the global financial world every second. Investors must keep up with this information to manage their portfolios effectively. One way of gauging market sentiment is to use sentiment analysis tools.

- Gauging the popularity of products, books, movies, songs, and services by analyzing data generated from different sources such as tweets, reviews, feedback forums, gestures (swipes, likes), etc.

The following diagram shows a summary of the analysis life cycle:

Now that we know what sentiment analysis is on a broader level, let’s dig deeper. Analyzing data can be tricky because the data, in almost all cases, is unstructured, opinionated, and, in some cases, incomplete, meaning it addresses only a fraction of the subject matter and is therefore unsuitable for calculating the emotion.

This data must be cleaned before it can be turned into something actionable. In most cases, the analysis focuses on deducing the polarity (good or bad, spam or ham, black or white, etc.) of the given piece of information, because opinions can be nuanced and lead to ambiguity. Put another way, the “problem” should be framed so that a binary classification (polarity) can be deduced from it. Now, I know most of you will ask: what about that “neutral” class between the “negative” and “positive” classes? Fair question.

Well, the answer is this: if the calculation comes out somewhere in the middle between 0 (negative) and 1 (positive), say 0.5, then the given piece of information, also known as the problem instance, can be classified as neither negative nor positive. A classification of neutral is more appropriate.
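To make the idea concrete, here is a minimal sketch of mapping a score between 0 and 1 to one of the three classes. The function name `label_from_score` and the `neutral_band` width of 0.1 are my own assumptions for illustration; where the neutral zone starts and ends is a design decision you would tune for your data.

```python
def label_from_score(score: float, neutral_band: float = 0.1) -> str:
    """Map a score in [0, 1] to 'negative', 'neutral', or 'positive'.

    neutral_band is a hypothetical tolerance around the midpoint 0.5.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    # Scores close to the midpoint are treated as neutral.
    if abs(score - 0.5) <= neutral_band:
        return "neutral"
    return "positive" if score > 0.5 else "negative"


for s in (0.1, 0.5, 0.9):
    print(s, label_from_score(s))
```

A wider band sends more borderline instances to the neutral class; a band of 0 makes the classification effectively binary.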

At a high level, two techniques can be used to perform sentiment analysis in an automated manner: rule-based and machine-learning-based. I will explore the former in this blog and take up the latter in part 2 of the series.

Rule Based

Rule-based sentiment analysis relies on studies conducted by language experts. The outcome of such a study is a set of rules (also known as a lexicon or sentiment lexicon) according to which words are classified as either positive or negative, along with a corresponding intensity measure.

Generally, the following steps are performed when applying the rule-based approach:

- Extract the data

- Tokenize the text. This is the task of splitting the text into individual words.

- Remove stop words. These are words that do not carry significant meaning and should not be used in the analysis. Examples of stop words are a, an, the, they, while, etc.

- Punctuation removal (in some cases)

- Run the preprocessed text against the sentiment lexicon. This yields a number (score) corresponding to the inferred emotion.

Example:

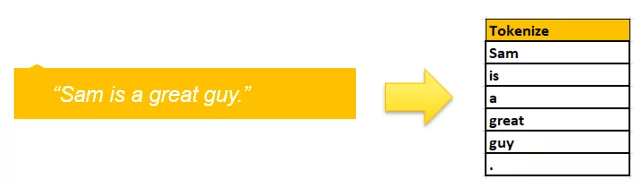

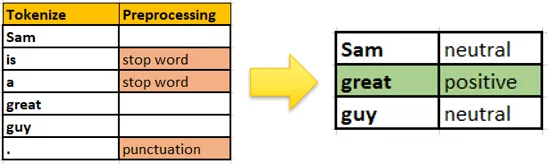

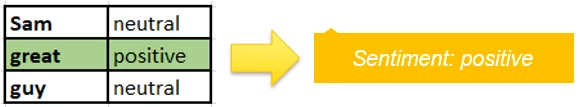

Consider this problem instance: “Sam is a great guy.”

- Tokenize

- Remove stop words and punctuation.

- Running the lexicon on the preprocessed data returns a positive sentiment score/measurement because of the presence of the positive word “great” in the input data.
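The three steps above can be sketched in a few lines of plain Python. Note that the stop-word list and the lexicon here are tiny toy versions I made up for this example; a real lexicon contains thousands of scored entries, and the function names are hypothetical.

```python
import re

# Toy stop-word list and lexicon, invented for this illustration only.
STOP_WORDS = {"a", "an", "the", "is", "they", "while"}
LEXICON = {"great": 3.0, "good": 2.0, "bad": -2.0, "terrible": -3.0}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def preprocess(tokens: list[str]) -> list[str]:
    """Drop stop words and punctuation-only tokens."""
    return [t for t in tokens if t not in STOP_WORDS and re.fullmatch(r"\w+", t)]


def score(tokens: list[str]) -> float:
    """Sum the lexicon values of the tokens; unknown words count as 0."""
    return sum(LEXICON.get(t, 0.0) for t in tokens)


tokens = tokenize("Sam is a great guy.")
cleaned = preprocess(tokens)
print(tokens)          # ['sam', 'is', 'a', 'great', 'guy', '.']
print(cleaned)         # ['sam', 'great', 'guy']
print(score(cleaned))  # 3.0
```

The score is positive only because “great” is in the lexicon; “sam” and “guy” are unknown words and contribute nothing.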

- This is a very simple example. Real-world data is much more complex and nuanced. For example, your problem instance may contain sarcasm (where seemingly positive words carry negative meaning or vice versa), shorthand, abbreviations, different spellings (e.g., flavor vs. flavour), misspelled words, punctuation (especially question marks), slang, and, of course, emojis.

- One way to tackle such complex data is to use sophisticated lexicons that take into account the intensity of words (if a word is positive, how positive is it? There is a difference between good, great, and amazing, and that difference is represented by the intensity assigned to each word), the subjectivity or objectivity of the word, and the context. Several such lexicons are available. Here are a few popular ones:

- VADER (Valence Aware Dictionary and Sentiment Reasoner): Widely used for analyzing social media text because it has been specifically attuned to sentiments expressed in social media (as per the linked docs). It now comes out of the box in the Natural Language Toolkit (NLTK). VADER is sensitive to both polarity and intensity. Here is how to read the valence ratings assigned to the words in its lexicon:

- -4: extremely negative

- 4: extremely positive

- 0: Neutral or N/A
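To show how intensity ratings on such a scale could turn into a single score, here is a toy sketch. It is not VADER itself: the `VALENCE` values are invented for illustration, and the function name `compound` is my own. The squashing step has the same form that VADER, as far as I know, uses to normalize its compound score into the range -1 to 1 (with `alpha = 15`); the real library also accounts for negation, punctuation, capitalization, and degree modifiers, which this sketch ignores.

```python
import math

# Hypothetical valence ratings on a -4..4 scale (not taken from VADER's lexicon).
VALENCE = {"good": 2.0, "great": 3.0, "amazing": 3.5, "bad": -2.0, "awful": -3.5}


def compound(text: str, alpha: float = 15.0) -> float:
    """Sum word valences and squash the total into the open interval (-1, 1)."""
    words = (w.strip(".,!?").lower() for w in text.split())
    total = sum(VALENCE.get(w, 0.0) for w in words)
    return total / math.sqrt(total * total + alpha)


for sentence in ("The food was good.", "The food was great.", "The food was amazing!"):
    print(sentence, round(compound(sentence), 4))
```

Because “good”, “great”, and “amazing” carry increasing ratings, the three sentences get increasing scores even though all of them are positive.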

Examples (taken from the docs):

- TextBlob: This useful NLP library comes prepackaged with its sentiment analysis functionality. It is also based on NLTK. The sentiment property of the API/library returns polarity and subjectivity.

- Polarity range: -1.0 to 1.0

- Subjectivity range: 0.0 – 1.0 (0.0 is very objective, and 1.0 is very subjective)

- Example (from the docs):

- Problem instance: “Textblob is amazingly simple to use. What great fun!”

- Polarity: 0.39166666666666666

- Subjectivity: 0.4357142857142857

- Polarity: as mentioned earlier, it measures how positive or negative the given problem instance is. In other words, it is related to the emotion of the given text

- Subjectivity refers to opinions or views (can be allegations, expressions, or speculations) that need to be analyzed in the given context of the problem statement. The more subjective the instance is, the less objective it becomes, and vice versa. A subjective instance (e.g., a sentence) may or may not convey emotion. Examples:

- “Sam likes watching football”

- “Sam is driving to work”

- SentiWordNet: This is also built into NLTK. It is used for opinion mining and helps in deducing the polarity of a given problem instance. SentiWordNet (SWN) extends WordNet, a lexical database of words and the relationships between them (hence the term net), which was developed at Princeton and is part of the NLTK corpus. Here, I’d focus primarily on synsets, which are logical groupings of nouns, verbs, adjectives, and adverbs into sets of cognitive synonyms, hence the term synset (you can read more on WordNet online).

- Coming back to Sentiwordnet and its relationship with Wordnet, Sentiwordnet assigns polarity to each synset.

Example:

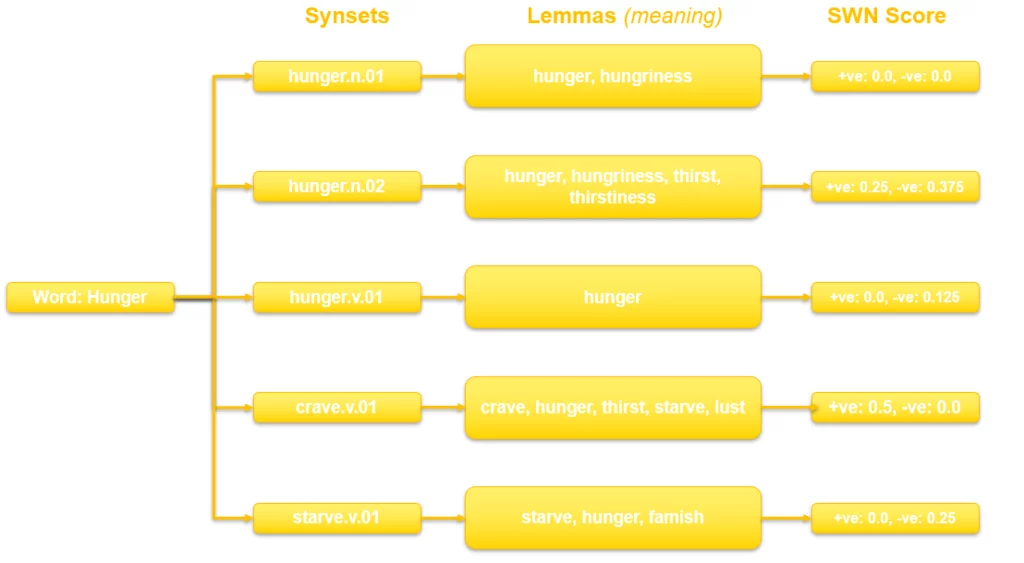

- Let’s take the word “hunger”.

- The WordNet part:

- Get a list of all the synsets for hunger.

- The SentiWordNet (SWN) part:

- Get the polarity for all those synsets.

- Use one, or maybe a combination of all of them, to determine the polarity score for that word (hunger in our case). The following diagram shows this flow.

- I am showing the lemmas only for illustrative purposes here; I find it helpful to understand the synsets I am working with.

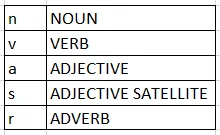
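The flow for “hunger” can also be sketched in code. To keep the example self-contained, I am using a small in-memory table instead of the real WordNet and SentiWordNet corpora. The scores below are hypothetical values and NOT real SentiWordNet data, and the class and function names are my own.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SentiSynset:
    name: str         # e.g. "hunger.n.01": word, part of speech, sense number
    pos_score: float  # how positive this sense is
    neg_score: float  # how negative this sense is


# Hypothetical scores for illustration; they are NOT real SentiWordNet values.
SENTI_SYNSETS = {
    "hunger": [
        SentiSynset("hunger.n.01", 0.0, 0.25),
        SentiSynset("hunger.n.02", 0.25, 0.0),
        SentiSynset("hunger.v.01", 0.0, 0.5),
    ]
}


def polarity(synset: SentiSynset) -> float:
    """Polarity of one synset: positive score minus negative score."""
    return synset.pos_score - synset.neg_score


def word_polarity(word: str, strategy: str = "first") -> float:
    """Combine synset polarities: the most common sense only, or the mean of all."""
    synsets = SENTI_SYNSETS.get(word, [])
    if not synsets:
        return 0.0  # unknown word: treat as neutral
    if strategy == "first":
        return polarity(synsets[0])
    return mean(polarity(s) for s in synsets)


print(word_polarity("hunger", "first"))  # -0.25
print(word_polarity("hunger", "mean"))
```

Using only the first synset trusts the most common sense of the word; averaging is more robust when you do not know which sense the text intends.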

- Here is how to interpret the tags assigned to the given synsets:

- hunger: the word we need the polarity of

- n: part of speech (n = noun)

- 01: sense number (01 is the most common usage; higher numbers indicate less common usages)
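As a small illustration, a synset name such as hunger.n.01 can be split into these three parts programmatically. This is only a sketch; the helper `parse_synset_name` and the `SynsetName` type are hypothetical names of mine, while the single-letter part-of-speech codes follow the WordNet convention.

```python
from typing import NamedTuple

# WordNet part-of-speech codes.
POS_TAGS = {
    "n": "noun",
    "v": "verb",
    "a": "adjective",
    "s": "adjective satellite",
    "r": "adverb",
}


class SynsetName(NamedTuple):
    word: str
    pos: str
    sense: int


def parse_synset_name(name: str) -> SynsetName:
    """Split 'word.pos.nn' from the right, so words containing dots still work."""
    word, pos, sense = name.rsplit(".", 2)
    return SynsetName(word, POS_TAGS.get(pos, pos), int(sense))


print(parse_synset_name("hunger.n.01"))
# SynsetName(word='hunger', pos='noun', sense=1)
```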

- The following should guide in understanding the given grouping/synset of a word (as per the link).

Pros and cons of using the rule-based sentiment analysis approach.

I will take up the machine-learning-based approach in the second blog in this series.
