Building an enterprise-grade data integration pipeline is a lengthy process and requires many architectural considerations and guidelines. Often, businesses evolve so quickly that the IT team can’t keep up with the demands of the rapidly changing business environment. To address this issue, Microsoft has created a fully managed data preparation tool for Power BI that both developers and business users can use to connect to data sources and prepare data for reporting and visualizations.

What is Dataflow?

A dataflow can be deemed as a cloud based ETL (extract transform and load) pipeline used to get data from different data sources, transform the data by applying business rules, and then store it in a format that can be used for visualizations and reporting. It is a collection of entities that a developer or a business user can develop. It maps out the flow of information within a system.

Power BI Dataflow

Power BI Dataflow is a Power BI feature that allows businesses to unify data from several sources, prepare it for consumption, and publish it for users to consume in Power BI. It’s similar to Power Query, which runs in the cloud.

In Power Query, we perform transformations on our data limited to only that report. They cannot be reused for other Power BI reports. This can be quite frustrating as you must copy or rewrite the code every time you want to use the same logic for different reports. Also, a simple change in logic needs to be made manually for all the implemented reports.

In this case, Dataflows come to the rescue. You can design reusable data integration pipelines used in different Power BI reports using Power BI Dataflows. You can also share your dataflows with other people in your organization, and they can use them as well. This is useful because it eliminates any additional overhead when the logic gets changed. All downstream dataflows are automatically updated to reflect the new logic whenever you change your dataflow.

How does it work?

A dataflow collects information from several sources (more than 80 data sources are supported already). It then transforms the data using the dataflow engine, based on the transformations defined with the Power Query authoring experience. Finally, the data is loaded into the destination, a Power BI workspace, an Azure Data Lake Storage account for the organization, or a Power Platform environment.

In the case of dataflows, Power query scripts run independently of the report. There is no dataset bound to it. So, dataflow stores the data in Azure storage lake as a Common Data Model and manages the data lake configurations internally. You do not need any other account except your Power BI account and subscriptions.

Benefits of Dataflows

- Dataflows allow self-service data preparation, making the process more manageable. It requires no IT or developer background.

- Dataflows are capable of advanced transformations.

- Allows you to create a single version of truth; a centralized data warehouse which can be used for all reporting solutions.

- In a Power BI solution, a dataflow separates the data transformation layer from the modelling and visualization layer.

- Rather than being split across numerous artifacts, the data transformation code can be stored in a single dataflow.

- A dataflow creator only needs Power Query skills. The dataflow creator can be part of a team that produces the whole BI solution or operational application

- A dataflow is product agnostic. It’s not just a feature of Power BI; you can access its data from a variety of different tools and services.

- Runs in the cloud and requires no infrastructure management.

- Allows incremental Refresh (PBI Premium required)

Learn How Power BI Can Transform Your Data

Use Cases

A single Power Query Table in multiple Power BI reports

Whenever you feel the need to use one Power query table in multiple Power BI reports, Dataflows should always be your choice. You only need to perform transformations on a single table and that can be reused for all your reports that need the same logic to be implemented. Re-using tables or queries across multiple Power BI files is one of the best use cases of Dataflow.

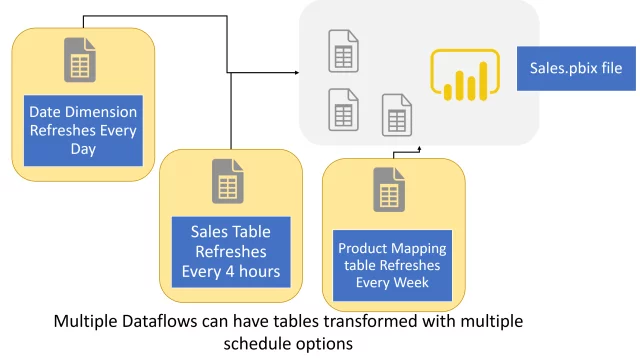

Different Data Source with Different Schedule Refreshes

Let’s suppose that you have a dataset that includes tables coming from different data sources and with different schedule options. One table needs to be refreshed once a week and the other to be refreshed once a day. So, for this you will have to refresh the report at maximum frequency, which is once a day, even though the other table doesn’t require daily refreshments.

Dataflows can run transformations on a different schedule for every query. You can create multiple dataflows for multiple tables and then schedule them according to your requirements. For the above-mentioned example you can create two dataflows transforming data for those two tables separately and then load them in the destination table. These dataflows can be scheduled according to the given requirements making the solution easier and more efficient.

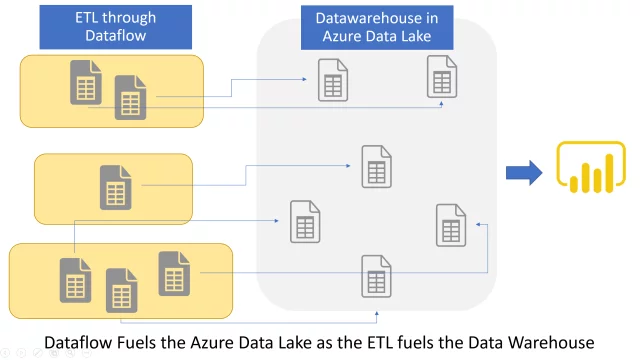

Build Data Warehouse

Dataflows helps you to build a centralized data warehouse. As the number of BI systems is increasing, the need for centralized data warehouses is also escalating. In BI, creation of a centralized data warehouse is one of the most important phases. It stores data in a way that is required for reporting by BI Analysts.

In a dataflow, Power Query scripts might help you create the structure you want. A dataflow will then run those scripts, extract data from data sources, and will store data into destination table which acts as a centralized data warehouse. This data warehouse can be used as a single source of truth for all the reporting solutions.

Conclusion

In this blog, we have discussed Power BI Dataflows, their benefits and use cases. Power BI dataflow is a self-service data integration tool provided in the Power BI service that can be used to gather data from various data sources and generate a cloud data model based on the dataset’s schema. The dataflows have the advantage of being reusable inside the organization, allowing you to design modular ETL pipelines to prepare your dataset.