Introduction

When working with huge datasets in Power BI, we often get stuck with battling performance issues. This can take a lot of our time, which otherwise could be used for report development.

We usually face these issues because of loading big data models in Power BI

- Slow data refreshes

- Higher latency reporting (report gets slow and sluggish)

- Getting a different license capacity for storing the model. Power BI Pro license only allows 1 GB data, whereas Premium allows 13 GB data in size.

- More pressure on source system and capacity resources.

- Slower calculation evaluations

- User dissatisfaction

Reducing the dataset size can improve performance and bring faster upload speeds to the Power BI service.

Empower your Business With Power BI - Explore Further!

Struggling with large datasets in Power BI? Let AlphaBOLD's team of experts assist you in optimizing your Power BI performance.

Request a DemoPower BI Vertipaq Analyzer

Previously, we discussed the Vertipaq Engine how it stores data and performs compression to reduce the memory usage of your data model.

Recommended Reading:

To find out more about the Vertipaq Engine.

In this blog we are going to explore:

- What Vertipaq Analyzer is

- Vertipaq Analyzer features and functions

- How it helps in reduce data size in Power BI

What Is Vertipaq Analyzer?

Vertipaq Analyzer is a helpful tool used to collect size information for all the objects in a database. It allows you to investigate detailed information according to the structure and compression of your model.

Learn About The Brain & Muscles Of PowerBI (Vertipaq Engine)

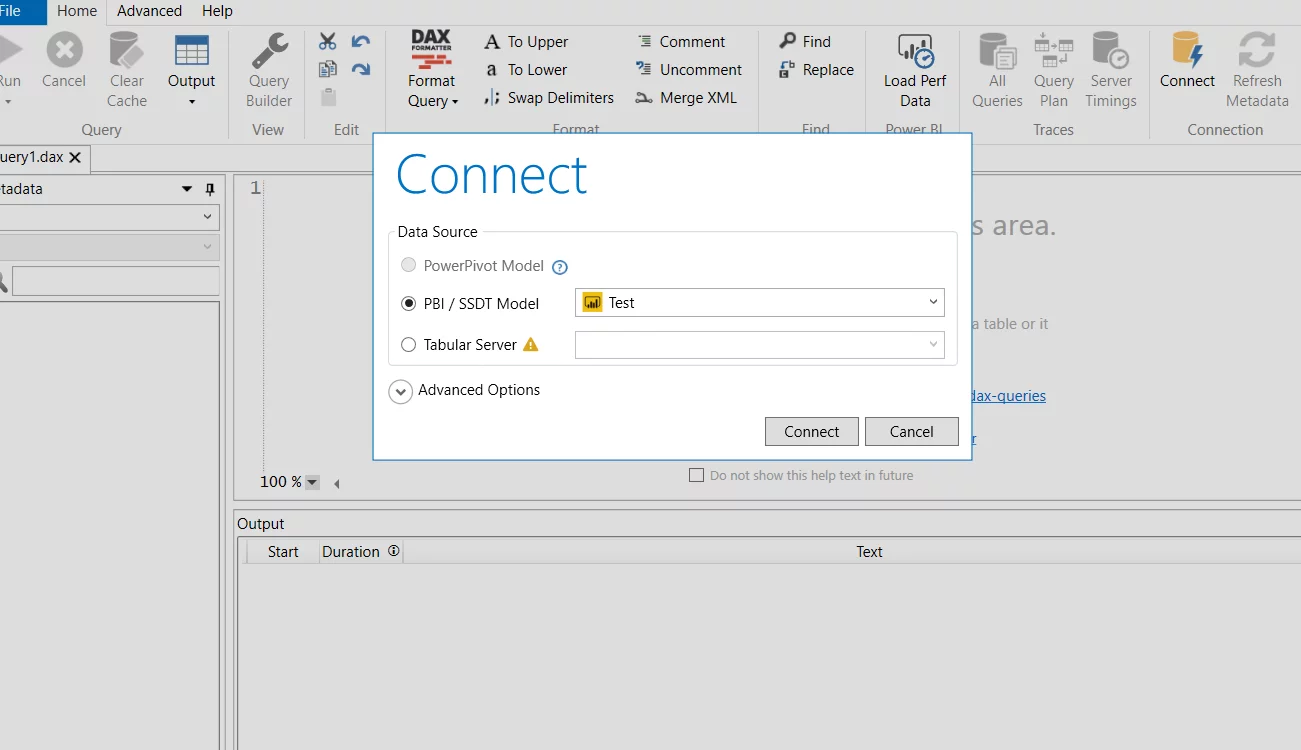

You can connect to your Power BI desktop from DAX Studio to see detailed information bout your data model. This visualization hierarchically shows the table and column information.

To demonstrate, we are going to connect to our sample PBI desktop file ‘TEST’ from DAX studio. We want to find out what columns consume the most space in this model and how we can to reduce its data model size.

The current size of ‘Test’ file is 28,783 KB.

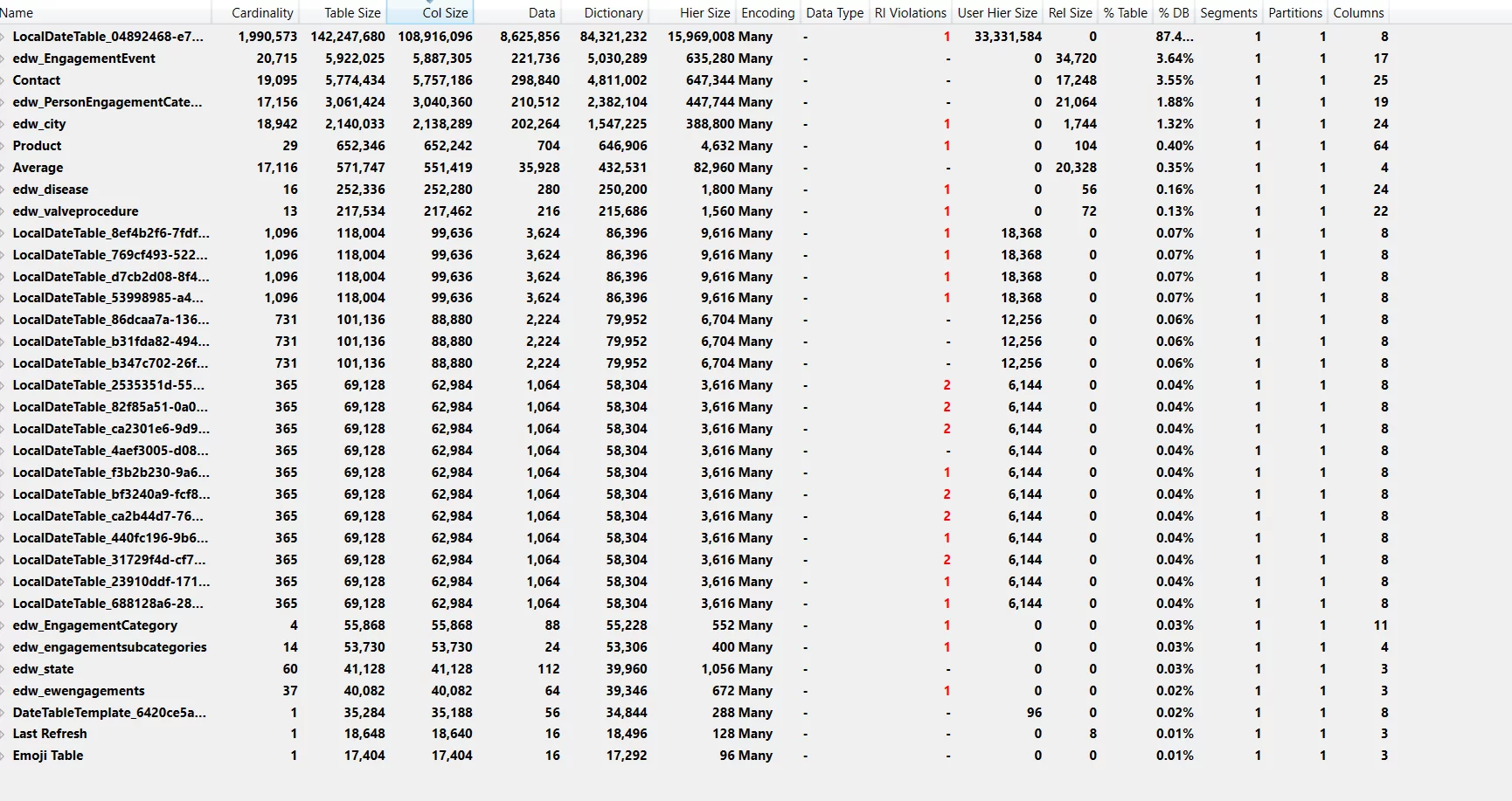

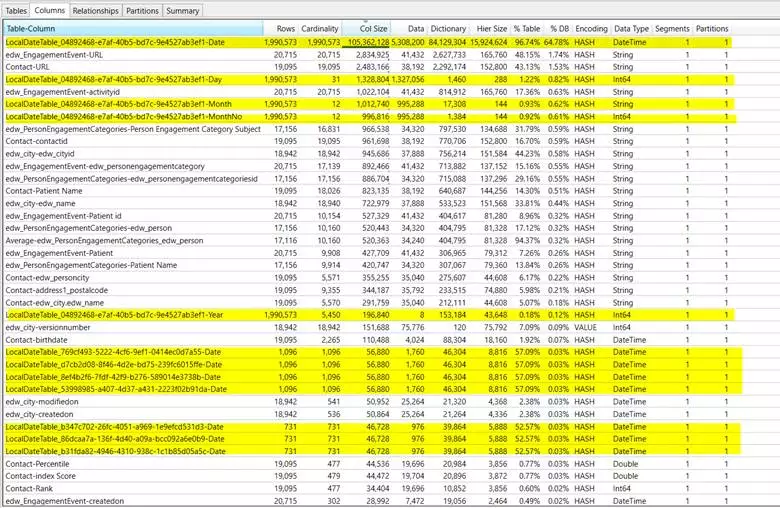

After connecting to PBI Desktop, we can analyze the complete metadata of the tables as shown below.

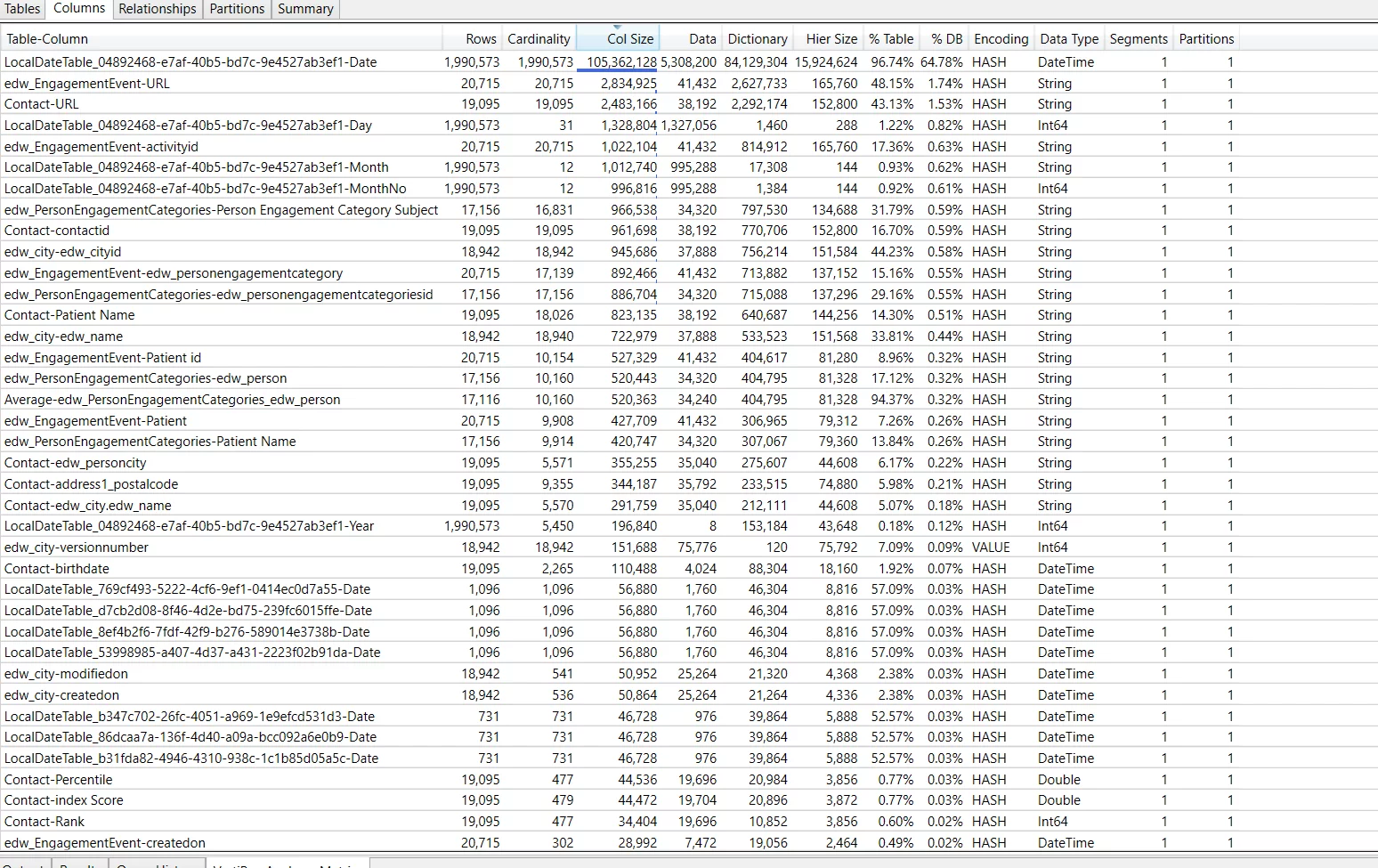

You can also see detailed column information, regardless of the table they belong to, as shown below.

It can help identify the most expensive columns with high cardinality. So, you can decide if you want to discard a few or keep them all.

By looking at this detailed information from Vertipaq Analyzer, you can easily decide which of the following techniques you should apply when trying to reduce the data model size.

Basic Techniques For Data Compression

Below are five techniques that can help reduce the size of your dataset and make sure that your model stays compact while delivering all the insights.

- Manage Date Time Columns

- Delete Unneeded Columns

- Remove Unnecessary Rows

- Amend Column Types

- Create New Columns in Power Query instead of using Calculated Column in DAX

Technique # 1: Manage Date Time Columns

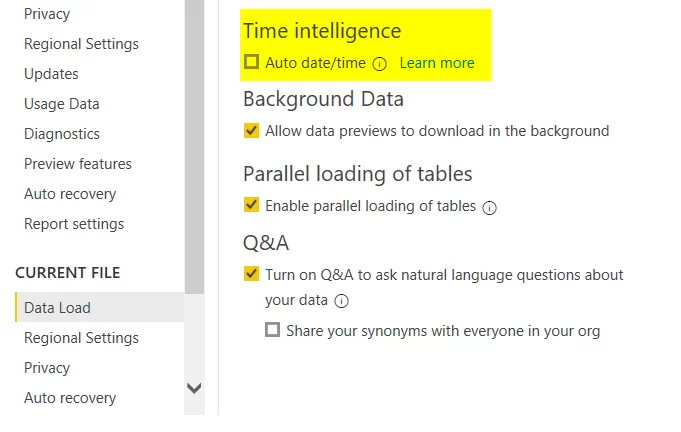

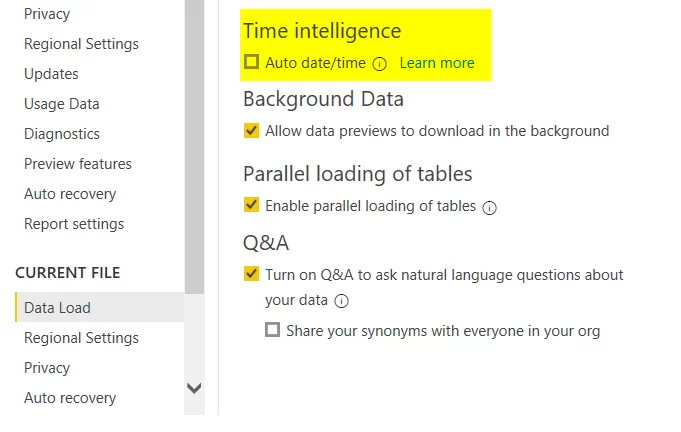

One of the important Date/Time techniques is to disable Auto date/time. Whenever it is enabled, it creates date/timetables for date columns. These tables are hidden and are, in fact, calculated tables. That’s why they increase the model size. So, it’s very important to disable this to reduce the report in size.

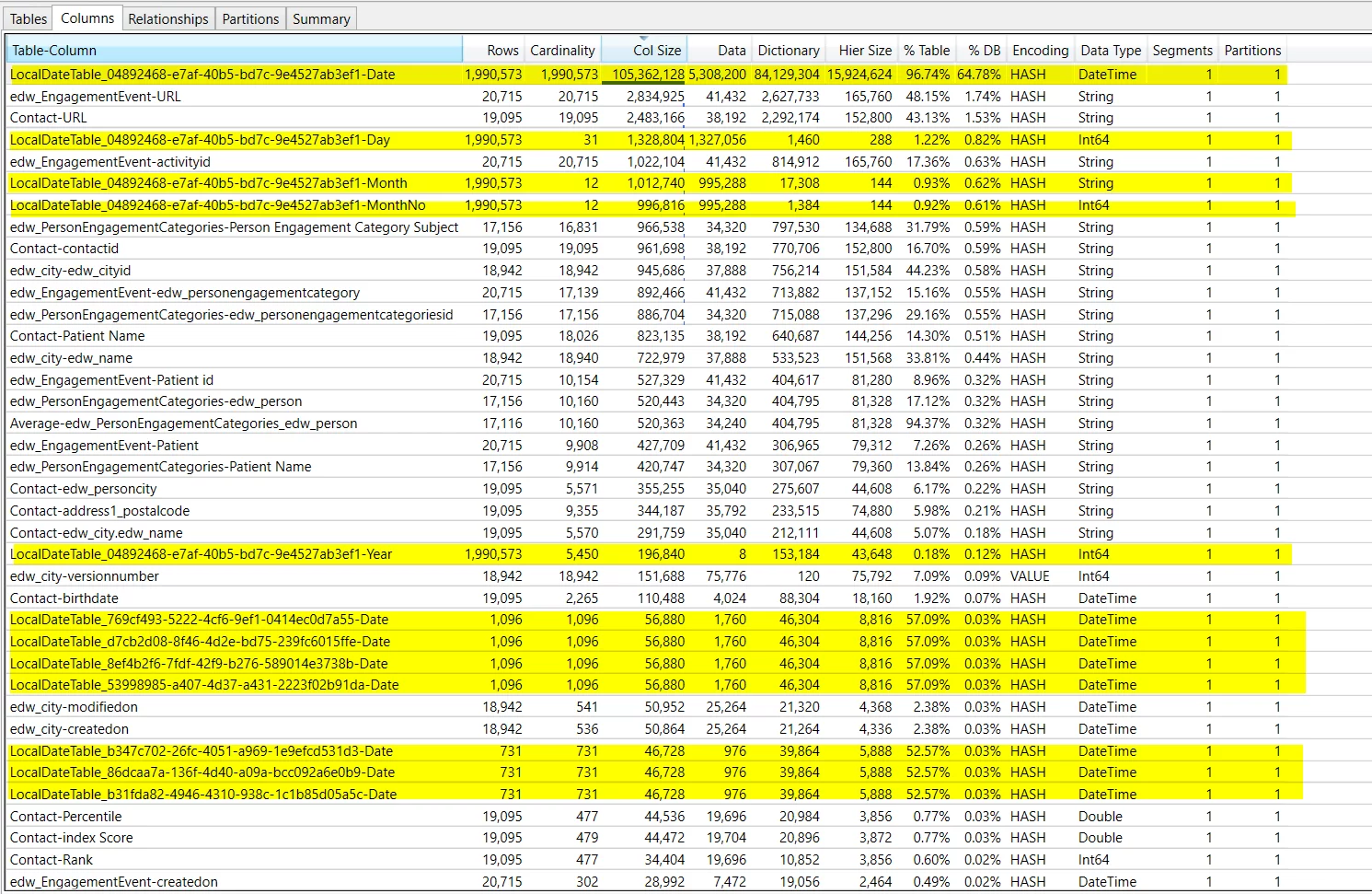

In the following image, you can see how big the size of these columns is and how they are contributing to the model size.

Uncheck the setting as below in PBI Desktop to turn auto date/time off.

After saving this change, the model size reduces by up to 65%!

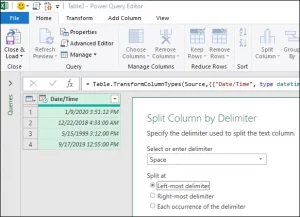

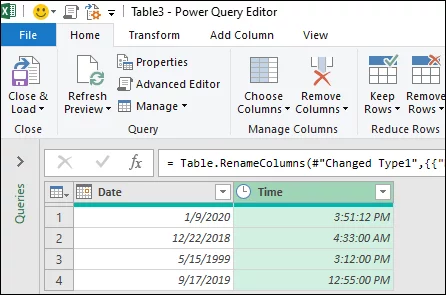

Another technique to reduce dataset size through managing date/time column is to split them. Power BI creates a date hierarchy for each date and date-time column. Dates always show up with time by default in Power BI. This can consume a lot of memory. In the Date Time column, the time value contains a fraction of milliseconds and consumes large amounts of memory for data storage. The best way to cut down on characters is to convert the Date Time column to date. If time values are required in the report, then we can split the Date Time column into two columns and use time values from the time column.

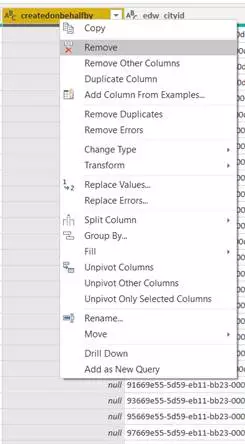

Technique # 2: Delete Unneeded Columns

Whenever we get data in Power BI, many columns are not used while creating the dashboards. So, the fastest way to reduce your dataset is to remove the unwanted columns and limit them to the ones you need. Either you can exclude them from the source directly, or do so using Power Query. Go through all the columns in the Power Query and delete those not in use in any of your reports or calculations.

If the requirements get changed in the future; you can simply add new columns as adding columns is much easier than deleting them.

Technique # 3: Remove Unnecessary Rows

Similarly, keep only those rows you need. Add only relevant and required data to the tables. Limiting the number of rows in a dataset is also known as Horizontal Filtering. You can filter out rows by entity or by time.

Filtering by entity involves two things:

- Omitting categories outside the scope of your analysis.

- Only loading a subset of source data into the model.

Filtering by time involves:

Limiting the amount of data to a few years as business context might have changed and including that older data may produce inaccurate results.

Technique # 4: Amend Column Types

Converting text columns to numeric can reduce memory usage significantly as integers are faster to operate and consumes less memory. So, you should convert any text column exclusively having numbers, e.g., Sales Order number, Invoice number etc. to numeric whenever it’s possible.

If there are integers with high precision, i.e., by default, it can show up to 15 digits, convert their data type to Fixed Decimal Number as it takes less memory and is more efficient. Lastly, if there are columns with values TRUE and FALSE, they should be converted to binary digits, i.e., 1 and 0.

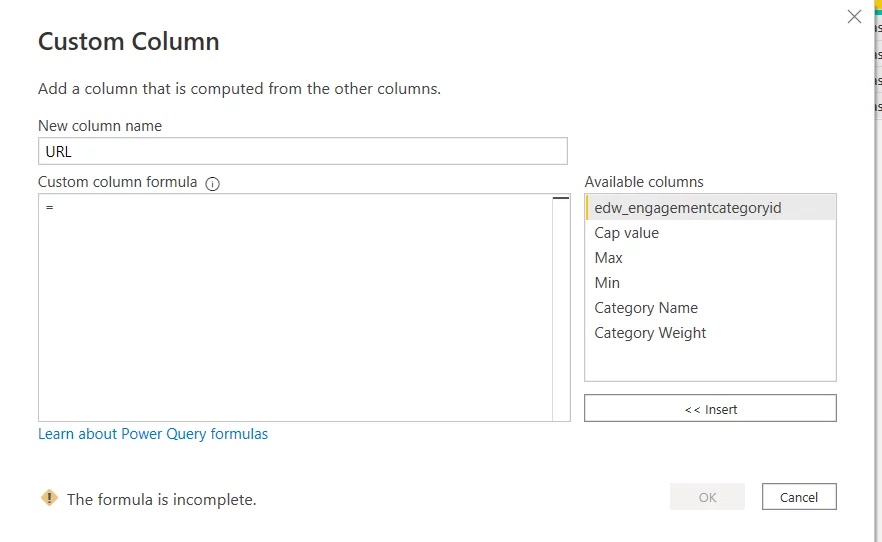

Technique # 5: Create Custom Columns In Power Query

In Power BI, the best practice is to create most of the calculated columns as Power Query computed columns in M language. The Vertipaq Engine stores both, the calculated columns (defined in DAX) and Power Query computed columns (defined in M language) in the same way. Still, the difference lies in how their data structures are stored. Due to this, issues like poor efficiency and extended data refresh times arise if you create columns through DAX. It is, therefore, less efficient to add columns as calculated columns as compared to Power Query computed columns.

However, there are some known exceptions, i.e., you can create certain calculated columns only through DAX. But it’s preferable to create them in Power Query as they can help you achieve greater load efficiency.

So, we reduced almost 75% of our data model size following the aforementioned techniques.

Transform Your Power BI Experience with AlphaBOLD

Maximize your Power BI capabilities with AlphaBOLD's expert services. Let us help you transform your Power BI experience.

Request a DemoConclusion

There are several ways to optimize a large data model in Power BI. In this blog, we have discussed some of the common techniques that will help reduce the size of your data model. You can try each of these and see which works best for you as it varies from dataset to dataset that which technique is more useful. And it’s all worth it as it helps you reduce your data model size, and you also get to learn a lot on the way.

Explore Recent Blog Posts