Introduction

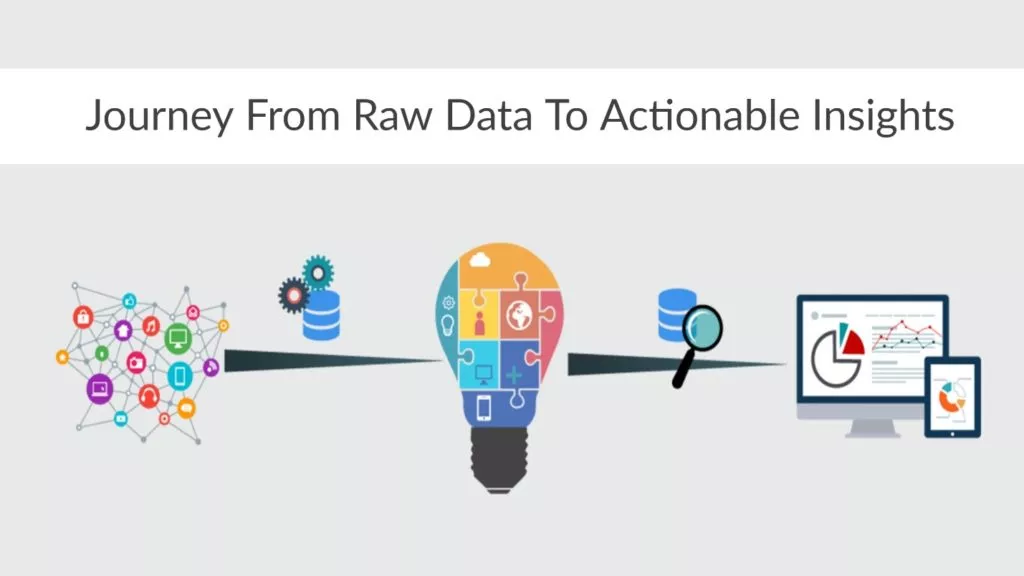

The age of the Internet of Things (IoT) has ushered us into an era of data-driven approaches to finding better solutions and guiding decision-making. According to Domo, we create 2.5 quintillion bytes of data every day.

Is all this data useful?

No.

Can this data be used directly?

No.

So, this is where feature engineering comes into play.

What Is Feature Engineering?

Think of feature engineering as a puzzle in which not every piece fits. There is no single, objectively correct solution. Instead, from a plethora of candidate features, we select the ones that produce the best results. In this context, a feature is any piece of data that is relevant to the problem at hand and can contribute to finding a better solution.

A data engineer will rarely come across real-life data that is already this well organized and structured. Before raw data can be consumed by a machine learning algorithm, used in business intelligence reporting, or employed for any other purpose, it must be converted into a structured format.

This process of transforming raw data into useful features is known as feature engineering.
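To make this concrete, here is a minimal sketch in Python. It assumes a hypothetical raw IoT feed in which each line is a comma-separated `timestamp,sensor_id,temperature` record. The names `Reading`, `parse_line`, and `build_features` are illustrative, as are the chosen per-sensor features: mean, minimum, and maximum temperature, reading count, and time span. They are not a prescribed feature set.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Reading:
    timestamp: datetime
    sensor_id: str
    temperature: float


def parse_line(line: str) -> Reading:
    """Parse one raw 'timestamp,sensor_id,temperature' line (hypothetical format)."""
    ts, sensor_id, temp = line.strip().split(",")
    return Reading(datetime.fromisoformat(ts), sensor_id, float(temp))


def build_features(raw_lines: list[str]) -> dict[str, dict[str, float]]:
    """Group raw readings by sensor and derive a few structured, per-sensor features."""
    by_sensor: dict[str, list[Reading]] = {}
    for line in raw_lines:
        if not line.strip():
            continue  # skip blank lines in the raw feed
        reading = parse_line(line)
        by_sensor.setdefault(reading.sensor_id, []).append(reading)

    features: dict[str, dict[str, float]] = {}
    for sensor_id, readings in by_sensor.items():
        temps = [r.temperature for r in readings]
        times = [r.timestamp for r in readings]
        features[sensor_id] = {
            "mean_temp": mean(temps),
            "min_temp": min(temps),
            "max_temp": max(temps),
            "n_readings": float(len(readings)),
            # minutes between the first and last reading from this sensor
            "span_minutes": (max(times) - min(times)).total_seconds() / 60,
        }
    return features


raw = [
    "2024-01-01T00:00:00,sensor-1,21.5",
    "2024-01-01T00:05:00,sensor-1,22.5",
    "",
    "2024-01-01T00:00:00,sensor-2,19.0",
]
features = build_features(raw)
print(features["sensor-1"])
```

After this step, each sensor has a fixed set of numeric columns that a model or a BI report could consume directly. Which aggregates are actually useful depends on the problem being solved.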

This brings us to the question: why should we go about this if there is no correct solution?

Necessity of Feature Engineering

Since there is no single correct solution, we need empirical evidence that the chosen features are the most relevant and effective for the problem. The selected feature set and its mathematical transformations contribute immensely to the reliability of a machine learning model. Selecting the wrong features leads to what is known as “garbage in, garbage out”: as the name suggests, poor inputs produce unreliable and outright incorrect results.

Several techniques can help avoid garbage-in, garbage-out situations. We will briefly skim over a few here and go into detail in a later article:

- Handling Missing Values: No data is perfect, and missing values are almost always present. Before a dataset can be used, these missing values must be removed or imputed. Replacing them with the average of the available values is one option. Other methods exist, each with its own applicability and shortcomings.

- Outlier Detection: Like missing values, outliers are common in datasets. They can be caused by a malfunctioning device, environmental swings, or an extremely rare occurrence. Either way, such data points can skew the results.

- Data Transformation: Data transformations are another essential part of data engineering. As mentioned earlier, data is not necessarily in an optimal state by default and may need to be transformed. A log transformation often comes in handy for reducing skew and bringing the data closer to a normal distribution. Other transformations may be more suitable depending on the data and its intended use.
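The three techniques above can be sketched with Python's standard library. This is a minimal illustration under several assumptions:

- Missing readings are represented as `None`.
- Outliers are flagged with Tukey's IQR fences, one common rule among several, and then treated as missing.
- Gaps are filled with the mean of the remaining readings.
- A `log1p` transform is applied to right-skewed, non-negative values.

The function names and sample data are hypothetical.

```python
import math
from statistics import mean, quantiles
from typing import Optional


def mask_outliers(values: list[Optional[float]], k: float = 1.5) -> list[Optional[float]]:
    """Replace values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR] with None."""
    observed = [v for v in values if v is not None]
    q1, _, q3 = quantiles(observed, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v if v is None or low <= v <= high else None for v in values]


def impute_mean(values: list[Optional[float]]) -> list[float]:
    """Fill missing readings (None) with the mean of the observed readings."""
    fill = mean([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def log_transform(values: list[float]) -> list[float]:
    """Apply log(1 + x) to compress right-skewed, non-negative values."""
    return [math.log1p(v) for v in values]


# Hypothetical temperature feed: one missing reading and one faulty spike (95.0).
readings = [20.0, 22.0, None, 21.0, 23.0, 95.0, 19.0, 21.0]
cleaned = impute_mean(mask_outliers(readings))
print(cleaned)

# Hypothetical right-skewed feature, e.g. messages sent per device per hour.
counts = [0, 9, 99, 999, 9999]
logged = log_transform(counts)
print([round(x, 2) for x in logged])
```

Masking outliers before imputing is a deliberate ordering choice here. It keeps the faulty 95.0 spike from inflating the fill value. If the mean were taken over all observed readings instead, the gap would be filled with about 31.6 rather than 21.0.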

Conclusion